COMPSCI 180 Final Project 3: High Dynamic Range

Aishik Bhattacharyya & Kaitlyn Chen

Introduction:

Most modern cameras can’t capture the full dynamic range of the world, so photographs are sometimes under- or over-exposed. This project combines multiple exposures of a scene into a high dynamic range radiance map and then converts that map into a displayable image using tone mapping. We relied on two papers for support: Debevec and Malik 1997 and Durand 2002. We use the image sets provided in the spec, and at the end we also run the pipeline on one of our own image sets.

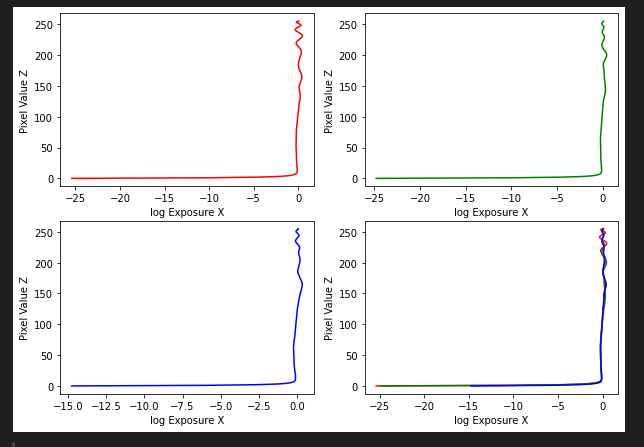

Calculating the Response Function and Log Film Irradiance Values:

To accomplish this, we need the pixel values at a set of sampled locations in each image, the log shutter speeds, the smoothness weight lambda (l), and the weighting function values. We initialize a matrix A whose unknowns are the response curve g and the log irradiances lE, along with a vector b that holds the weighted log shutter speeds. We populate A and b with one data-fitting equation per sampled pixel and exposure, following the paper. Then, in the current row k, we fix the curve's arbitrary offset by constraining g at the middle pixel value (128) to zero. For the smoothness constraints, each row places the coefficients l * w[i], -2 * l * w[i], and l * w[i] on g(i - 1), g(i), and g(i + 1), as in the paper. Finally, we solve the overdetermined system with least squares, which gives us both g and lE.
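The steps above can be sketched in Python with NumPy. This is a minimal sketch modeled on the gsolve routine described in Debevec and Malik's paper, not our exact code: the function name `gsolve`, the argument layout (Z as an N x P array of sampled pixel values, B as the log shutter speeds), the parameter name `lam` for lambda, and the use of `numpy.linalg.lstsq` as the solver are assumptions.

```python
import numpy as np


def gsolve(Z, B, lam, w):
    """Recover the response curve g and log irradiances lE (hypothetical sketch).

    Z:   (N, P) int array of pixel values in [0, 255], N locations x P exposures
    B:   (P,) log shutter speeds, ln(dt_j)
    lam: smoothness weight lambda
    w:   (256,) weighting function over pixel values
    """
    n = 256
    N, P = Z.shape
    A = np.zeros((N * P + 1 + (n - 2), n + N))
    b = np.zeros(A.shape[0])

    # Data-fitting rows: w(z) * (g(z) - lE_i) = w(z) * ln(dt_j)
    k = 0
    for i in range(N):
        for j in range(P):
            z = Z[i, j]
            wij = w[z]
            A[k, z] = wij
            A[k, n + i] = -wij
            b[k] = wij * B[j]
            k += 1

    # Fix the curve's arbitrary offset: g(128) = 0
    A[k, 128] = 1.0
    k += 1

    # Smoothness rows: lam * w(z) * (g(z-1) - 2 g(z) + g(z+1)) = 0
    for z in range(1, n - 1):
        A[k, z - 1] = lam * w[z]
        A[k, z] = -2 * lam * w[z]
        A[k, z + 1] = lam * w[z]
        k += 1

    # Least-squares solve of the overdetermined system
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:n], x[n:]
```

A common choice for w is the hat function from the paper, which for 8-bit images is w(z) = min(z, 255 - z); it down-weights values near 0 and 255, where pixels are most likely clipped. Larger lambda values give a smoother curve at the cost of fitting the samples less tightly.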

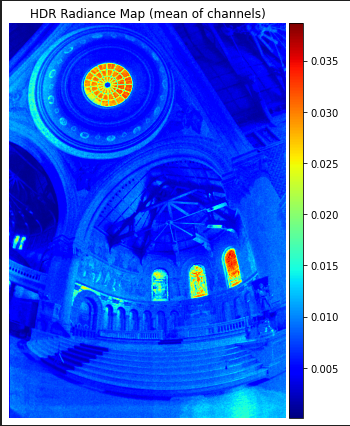

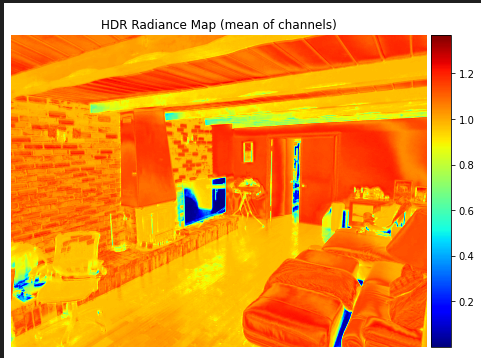

HDR Radiance Map Reconstruction:

We then wrote a function to reconstruct the HDR radiance map following the Debevec and Malik paper. It takes the filenames of the exposure stack, the response function g for each color channel, the weighting function over pixel values, the log shutter speeds, and the number of exposures in the stack. We read in the input images and convert them to RGB. Then, for each color channel, we loop over the exposures, look up the intensity at every pixel, and apply the weighting function. This accumulates two sums: the weighted contributions to the log radiance and the total of the weights. Finally, we divide the first sum by the second (the paper's HDR equation) to get each pixel's log radiance and store the result in our output array.
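The per-pixel computation is the paper's weighted average ln E = sum_j w(Z_j) (g(Z_j) - ln dt_j) / sum_j w(Z_j). The vectorized NumPy sketch below is hypothetical: the name `radiance_map` is ours, it takes already-loaded uint8 RGB arrays instead of filenames, stores g as a (3, 256) array, and returns the exponentiated log radiance. Pixels that carry zero total weight (clipped in every exposure) simply fall back to ln E = 0 here, which is a simplification.

```python
import numpy as np


def radiance_map(images, g, w, B):
    """Recover per-pixel radiance from an exposure stack (hypothetical sketch).

    images: list of P uint8 RGB arrays, each (H, W, 3)
    g:      (3, 256) NumPy array, response curve per channel
    w:      (256,) NumPy array, weighting function over pixel values
    B:      (P,) log shutter speeds, ln(dt_j)
    """
    stack = np.stack(images).astype(np.intp)       # (P, H, W, 3), usable as indices
    B = np.asarray(B, dtype=float)[:, None, None]  # broadcast over (P, H, W)
    ln_E = np.zeros(stack.shape[1:], dtype=float)
    for c in range(stack.shape[-1]):
        Z = stack[..., c]                               # (P, H, W) pixel values
        weights = w[Z]                                  # w(Z_ij)
        num = np.sum(weights * (g[c][Z] - B), axis=0)   # sum_j w * (g(Z) - ln dt)
        den = np.sum(weights, axis=0)                   # sum_j w
        # Zero-weight pixels fall back to ln E = 0 (simplification)
        ln_E[..., c] = np.where(den > 0, num / np.maximum(den, 1e-12), 0.0)
    return np.exp(ln_E)
```

Averaging across exposures with these weights lets well-exposed images dominate at each pixel, which also suppresses the noise that any single exposure would contribute on its own.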

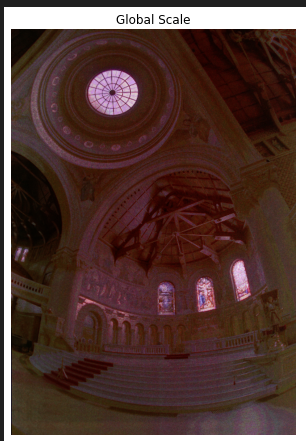

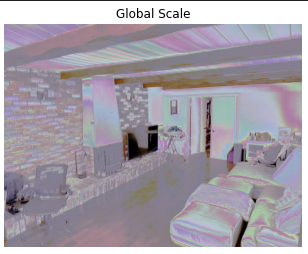

Local Tone Mapping:

Finally, we implemented the Durand 2002 tone-mapping algorithm for HDR images. It splits the image into a base layer and a detail layer: the base layer is computed by applying a bilateral filter to the log luminance, and the detail layer is the difference between the log luminance and the base layer. The base layer is then compressed and recombined with the detail layer to form the new log luminance. To restore color, the HDR image is divided by its original luminance and multiplied by the tone-mapped luminance, and the result is normalized to produce the final image.
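A compact sketch of this base/detail decomposition in Python with NumPy follows. The brute-force `bilateral_filter` helper, the channel-average intensity, the default sigma values, and the target base contrast of 5:1 (`log_range = log10(5)`) are illustrative assumptions, not necessarily the parameters we used; a library filter such as OpenCV's `cv2.bilateralFilter` would be much faster on full-size images.

```python
import numpy as np


def bilateral_filter(img, sigma_s=2.0, sigma_r=0.4):
    """Brute-force bilateral filter of a 2-D float array (hypothetical helper)."""
    radius = int(np.ceil(2 * sigma_s))
    H, W = img.shape
    padded = np.pad(img, radius, mode="edge")
    num = np.zeros((H, W))
    den = np.zeros((H, W))
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbor values at offset (dy, dx) for every pixel
            shifted = padded[radius + dy:radius + dy + H, radius + dx:radius + dx + W]
            # Spatial Gaussian times range Gaussian on the value difference
            wgt = (np.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
                   * np.exp(-((shifted - img) ** 2) / (2 * sigma_r ** 2)))
            num += wgt * shifted
            den += wgt
    return num / den  # den >= 1 because the center tap has weight 1


def tone_map(hdr, log_range=np.log10(5), sigma_s=2.0, sigma_r=0.4, eps=1e-8):
    """Base/detail tone mapping of an (H, W, 3) radiance map; returns values in [0, 1]."""
    intensity = hdr.mean(axis=2)                      # assumption: simple channel average
    log_i = np.log10(intensity + eps)
    base = bilateral_filter(log_i, sigma_s, sigma_r)  # large-scale variation
    detail = log_i - base                             # fine texture and edges
    # Compress the base so its log10 range equals log_range, with its max at 0
    scale = log_range / max(base.max() - base.min(), eps)
    log_out = (base - base.max()) * scale + detail
    out_i = 10.0 ** log_out
    # Restore color: divide by the old intensity, multiply by the new one
    out = hdr / (intensity[..., None] + eps) * out_i[..., None]
    return np.clip(out / max(out.max(), eps), 0.0, 1.0)
```

Working in log10 space makes the range sigma act on contrast ratios rather than absolute brightness, so the bilateral filter preserves strong edges (large log differences) while smoothing everything else into the base layer; only the base is compressed, so the detail layer passes through unchanged.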

Bells & Whistles - algorithm on our own photos:

Input exposure images: